Merry X-Mas! The Splitblog in December

What would December be without perfectly polished year-end reviews and Christmas greetings? Of course, we’re joining in. Yes, we can all do without hackneyed phrases and self-congratulatory descriptions. But it is important to look back now and then, and December is made for that. So let’s go.

Working in a startup sometimes feels like reaching for the stars. You stretch, higher and higher, strive to get just a little bit further, and in the end, it’s still not enough. Again. And what do you do then? Take a deep breath, gather your strength, and try again. Support is extremely important in this process.

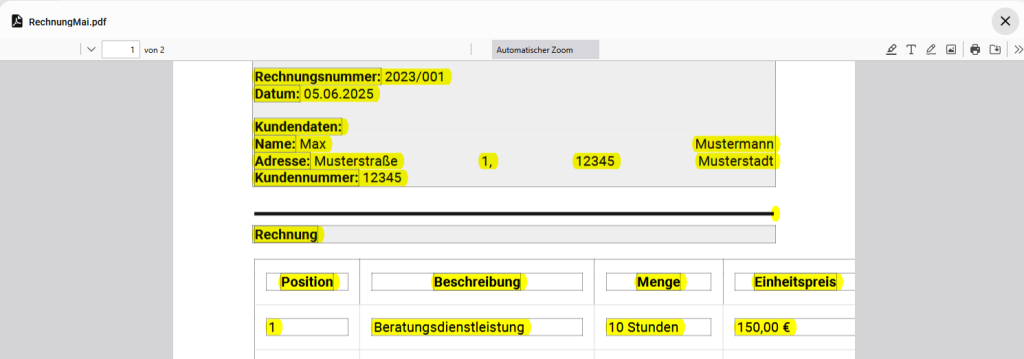

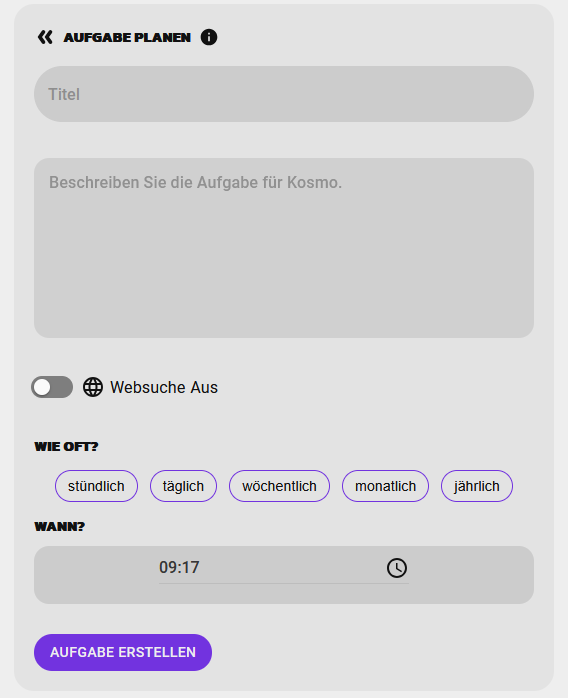

We were pleased to receive such support at the beginning of the year when our submitted project “Chatbot meets administration: Intelligent dialogue systems as a future solution for non-profit organizations” was approved by the Federal Ministry of Labor and Social Affairs as part of the Civic Innovation Platform. Together with the Kinderschutzbund Kreisverband Ostholstein e.V., the Landesverband der Kleingartenvereine Schleswig-Holsteins e.V., InMotion e.V. and the Ostsee-Holstein-Tourismus e.V., we are working on EVA – an AI-supported chatbot that is intended to make association work easier. EVA is now ready for testing and available as open source software. This cooperation helps us to take a broad spectrum of needs into account during development. We are very grateful for the time invested by the participating associations, the positive exchange and the feedback. The uncomplicated help from our external consultants from the Zukunftslabor Generative KI, from DSS IT Security and the Lauprecht law firm is also worth its weight in gold.

We also successfully concluded some important contracts this year, which have shown us that we are on the right track and that our idea meets a real need.

What also helps: recognition. We received it in the form of first place in the Digitalization Prize of Schleswig-Holstein, presented in person by Dirk Schrödter. Such a trophy not only looks good illuminated in the meeting room, it also carries enormous symbolic power. A “courage award”, as Mr. Schrödter aptly put it. Because that is exactly what can make the difference: the courage to keep trying.

As a team, we have achieved a lot this year and have grown even closer in some respects. When you endure setbacks together, the successes are all the more enjoyable. Each and every one of us has gone more than just the extra mile in recent months. Our trainee Ramtin deserves a mention here as a representative of everyone. Ramtin never has to be asked, and his commitment goes far beyond what is expected. So it is hardly surprising that he can look forward to a permanent contract long before the end of his training.

The Web Summit in Lisbon, which we were able to attend as part of the de:hub Initiative, was also an inspiring event. Anyone who has been there knows its impressive atmosphere and spirit.

And then there’s Friedrich, who has not only been with us and Kontor Business IT since the beginning, but has also supported us with all his might. He completed his master’s degree this year and is now running Splitbot together with Tadeusz.

Well, and now? We probably don’t hold our star in our hands yet. But it is within reach. And next year, too, we will do everything we can to reach it. With the goal in mind and our network and our team behind us.